tesseract::Parallel Class Reference

#include <parallel.h>

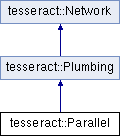

Inheritance diagram for tesseract::Parallel:

Public Member Functions

| TESS_API | Parallel (const char *name, NetworkType type) |

| StaticShape | OutputShape (const StaticShape &input_shape) const override |

| std::string | spec () const override |

| void | Forward (bool debug, const NetworkIO &input, const TransposedArray *input_transpose, NetworkScratch *scratch, NetworkIO *output) override |

| bool | Backward (bool debug, const NetworkIO &fwd_deltas, NetworkScratch *scratch, NetworkIO *back_deltas) override |

Public Member Functions inherited from tesseract::Plumbing

| | Plumbing (const std::string &name) |

| | ~Plumbing () override |

| StaticShape | InputShape () const override |

| std::string | spec () const override |

| bool | IsPlumbingType () const override |

| void | SetEnableTraining (TrainingState state) override |

| void | SetNetworkFlags (uint32_t flags) override |

| int | InitWeights (float range, TRand *randomizer) override |

| int | RemapOutputs (int old_no, const std::vector< int > &code_map) override |

| void | ConvertToInt () override |

| void | SetRandomizer (TRand *randomizer) override |

| virtual void | AddToStack (Network *network) |

| bool | SetupNeedsBackprop (bool needs_backprop) override |

| int | XScaleFactor () const override |

| void | CacheXScaleFactor (int factor) override |

| void | DebugWeights () override |

| const std::vector< Network * > & | stack () const |

| void | EnumerateLayers (const std::string *prefix, std::vector< std::string > &layers) const |

| Network * | GetLayer (const char *id) const |

| float | LayerLearningRate (const char *id) |

| void | ScaleLayerLearningRate (const char *id, double factor) |

| void | SetLayerLearningRate (const char *id, float learning_rate) |

| float * | LayerLearningRatePtr (const char *id) |

| bool | Serialize (TFile *fp) const override |

| bool | DeSerialize (TFile *fp) override |

| void | Update (float learning_rate, float momentum, float adam_beta, int num_samples) override |

| void | CountAlternators (const Network &other, TFloat *same, TFloat *changed) const override |

Public Member Functions inherited from tesseract::Network

| | Network () |

| | Network (NetworkType type, const std::string &name, int ni, int no) |

| virtual | ~Network ()=default |

| NetworkType | type () const |

| bool | IsTraining () const |

| bool | needs_to_backprop () const |

| int | num_weights () const |

| int | NumInputs () const |

| int | NumOutputs () const |

| virtual StaticShape | InputShape () const |

| virtual StaticShape | OutputShape (const StaticShape &input_shape) const |

| const std::string & | name () const |

| virtual std::string | spec () const |

| bool | TestFlag (NetworkFlags flag) const |

| virtual bool | IsPlumbingType () const |

| virtual void | SetEnableTraining (TrainingState state) |

| virtual void | SetNetworkFlags (uint32_t flags) |

| virtual int | InitWeights (float range, TRand *randomizer) |

| virtual int | RemapOutputs (int old_no, const std::vector< int > &code_map) |

| virtual void | ConvertToInt () |

| virtual void | SetRandomizer (TRand *randomizer) |

| virtual bool | SetupNeedsBackprop (bool needs_backprop) |

| virtual int | XScaleFactor () const |

| virtual void | CacheXScaleFactor (int factor) |

| virtual void | DebugWeights ()=0 |

| virtual bool | Serialize (TFile *fp) const |

| virtual bool | DeSerialize (TFile *fp)=0 |

| virtual void | Update (float learning_rate, float momentum, float adam_beta, int num_samples) |

| virtual void | CountAlternators (const Network &other, TFloat *same, TFloat *changed) const |

| virtual void | Forward (bool debug, const NetworkIO &input, const TransposedArray *input_transpose, NetworkScratch *scratch, NetworkIO *output)=0 |

| virtual bool | Backward (bool debug, const NetworkIO &fwd_deltas, NetworkScratch *scratch, NetworkIO *back_deltas)=0 |

| void | DisplayForward (const NetworkIO &matrix) |

| void | DisplayBackward (const NetworkIO &matrix) |

Additional Inherited Members

Static Public Member Functions inherited from tesseract::Network

| static Network * | CreateFromFile (TFile *fp) |

| static void | ClearWindow (bool tess_coords, const char *window_name, int width, int height, ScrollView **window) |

| static int | DisplayImage (Image pix, ScrollView *window) |

Protected Member Functions inherited from tesseract::Network

| TFloat | Random (TFloat range) |

Protected Attributes inherited from tesseract::Plumbing

| std::vector< Network * > | stack_ |

| std::vector< float > | learning_rates_ |

Protected Attributes inherited from tesseract::Network

| NetworkType | type_ |

| TrainingState | training_ |

| bool | needs_to_backprop_ |

| int32_t | network_flags_ |

| int32_t | ni_ |

| int32_t | no_ |

| int32_t | num_weights_ |

| std::string | name_ |

| ScrollView * | forward_win_ |

| ScrollView * | backward_win_ |

| TRand * | randomizer_ |

Detailed Description

Definition at line 26 of file parallel.h.

Constructor & Destructor Documentation

◆ Parallel()

| tesseract::Parallel::Parallel (const char *name, NetworkType type) |

Definition at line 34 of file parallel.cpp.

36 }

Member Function Documentation

◆ Backward()

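| bool tesseract::Parallel::Backward (bool debug, const NetworkIO &fwd_deltas, NetworkScratch *scratch, NetworkIO *back_deltas) | override virtual |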

Implements tesseract::Network.

Definition at line 113 of file parallel.cpp.

114 {

115 // If this parallel is a replicator of convolvers, or holds a 1-d LSTM pair,

116 // or a 2-d LSTM quad, do debug locally, and don't pass the flag on.

118 #ifndef GRAPHICS_DISABLED

119 DisplayBackward(fwd_deltas);

120 #endif

121 debug = false;

122 }

125 // Special case, run parallel in parallel.

126 std::vector<NetworkScratch::IO> in_deltas(stack_size);

127 std::vector<NetworkScratch::IO> out_deltas(stack_size);

128 // Split the forward deltas for each stack element.

129 int feature_offset = 0;

132 in_deltas[i].Resize(fwd_deltas, num_features, scratch);

134 in_deltas[i]->CopyUnpacking(fwd_deltas, feature_offset, num_features);

135 feature_offset += num_features;

136 }

137 #ifdef _OPENMP

138 # pragma omp parallel for num_threads(stack_size)

139 #endif

142 }

145 back_deltas->AddAllToFloat(*out_deltas[i]);

146 }

147 }

148 } else {

149 // Revolving partial deltas.

150 NetworkScratch::IO in_deltas(fwd_deltas, scratch);

151 // The sum of deltas from different sources, which will eventually go into

152 // back_deltas.

153 NetworkScratch::IO out_deltas;

154 int feature_offset = 0;

157 in_deltas->CopyUnpacking(fwd_deltas, feature_offset, num_features);

158 feature_offset += num_features;

161 out_deltas.ResizeFloat(*back_deltas, back_deltas->NumFeatures(), scratch);

162 out_deltas->CopyAll(*back_deltas);

163 } else if (back_deltas->NumFeatures() == out_deltas->NumFeatures()) {

164 // Widths are allowed to be different going back, as we may have

165 // input nets, so only accumulate the deltas if the widths are the

166 // same.

167 out_deltas->AddAllToFloat(*back_deltas);

168 }

169 }

170 }

172 back_deltas->CopyAll(*out_deltas);

173 }

174 }

176 back_deltas->ScaleFloatBy(1.0f / stack_size);

177 }

179 }

References DisplayBackward (const NetworkIO &matrix), defined at line 341 of file network.cpp.
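The listing above splits the forward deltas into one slice per stack element by advancing `feature_offset`, sums the deltas that come back, and scales the result by `1.0f / stack_size`. The following is a minimal sketch of those two steps, assuming plain `std::vector<float>` in place of `NetworkIO`. `SplitFeatures` and `AverageDeltas` are hypothetical helper names used only for illustration and are not part of the Tesseract API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the per-element CopyUnpacking loop: cut one
// packed feature vector into consecutive slices, one per stack element.
std::vector<std::vector<float>> SplitFeatures(const std::vector<float> &packed,
                                              const std::vector<int> &widths) {
  std::vector<std::vector<float>> parts;
  int feature_offset = 0;
  for (int num_features : widths) {
    assert(feature_offset + num_features <= static_cast<int>(packed.size()));
    parts.emplace_back(packed.begin() + feature_offset,
                       packed.begin() + feature_offset + num_features);
    feature_offset += num_features;
  }
  return parts;
}

// Hypothetical stand-in for AddAllToFloat followed by ScaleFloatBy: sum the
// equally sized delta vectors from every stack element, then multiply by
// 1 / (number of elements).
std::vector<float> AverageDeltas(const std::vector<std::vector<float>> &deltas) {
  assert(!deltas.empty());
  std::vector<float> sum(deltas[0].size(), 0.0f);
  for (const auto &d : deltas) {
    assert(d.size() == sum.size());
    for (std::size_t i = 0; i < sum.size(); ++i) {
      sum[i] += d[i];
    }
  }
  const float scale = 1.0f / static_cast<float>(deltas.size());
  for (float &v : sum) {
    v *= scale;
  }
  return sum;
}
```

Calling `SplitFeatures` with five packed features and widths `{2, 3}` gives a two-feature slice followed by a three-feature slice. `AverageDeltas` then combines equally wide results from every element into a single vector.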

◆ Forward()

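| void tesseract::Parallel::Forward (bool debug, const NetworkIO &input, const TransposedArray *input_transpose, NetworkScratch *scratch, NetworkIO *output) | override virtual |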

Implements tesseract::Network.

Definition at line 52 of file parallel.cpp.

53 {

54 bool parallel_debug = false;

55 // If this parallel is a replicator of convolvers, or holds a 1-d LSTM pair,

56 // or a 2-d LSTM quad, do debug locally, and don't pass the flag on.

58 parallel_debug = true;

59 debug = false;

60 }

63 // Special case, run parallel in parallel.

64 std::vector<NetworkScratch::IO> results(stack_size);

67 }

68 #ifdef _OPENMP

69 # pragma omp parallel for num_threads(stack_size)

70 #endif

73 }

74 // Now pack all the results (serially) into the output.

75 int out_offset = 0;

79 }

80 } else {

81 // Revolving intermediate result.

82 NetworkScratch::IO result(input, scratch);

83 // Source for divided replicated.

84 NetworkScratch::IO source_part;

85 TransposedArray *src_transpose = nullptr;

87 // Make a transposed copy of the input.

88 input.Transpose(&transposed_input_);

89 src_transpose = &transposed_input_;

90 }

91 // Run each network, putting the outputs into result.

92 int out_offset = 0;

95 // All networks must have the same output width

98 } else {

100 }

101 out_offset = output->CopyPacking(*result, out_offset);

102 }

103 }

104 #ifndef GRAPHICS_DISABLED

105 if (parallel_debug) {

107 }

108 #endif

109 }

References DisplayForward (const NetworkIO &matrix), defined at line 333 of file network.cpp.
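The "run parallel in parallel" branch above computes one result per stack element, optionally under OpenMP, and then packs the results serially into the output with a running `out_offset`. The following is a minimal sketch of that pattern, assuming `std::vector<float>` in place of `NetworkIO` and `std::function` in place of `Network`. The `SubNet` alias, the free function `CopyPacking`, and `ForwardParallel` are hypothetical names that only mirror the listing and are not Tesseract's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical sub-network type: maps an input vector to an output vector.
using SubNet = std::function<std::vector<float>(const std::vector<float> &)>;

// Hypothetical analogue of CopyPacking: write src into dest starting at
// offset and return the offset just past the copied features.
int CopyPacking(const std::vector<float> &src, int offset,
                std::vector<float> *dest) {
  assert(offset + static_cast<int>(src.size()) <=
         static_cast<int>(dest->size()));
  for (std::size_t i = 0; i < src.size(); ++i) {
    (*dest)[offset + i] = src[i];
  }
  return offset + static_cast<int>(src.size());
}

std::vector<float> ForwardParallel(const std::vector<SubNet> &stack,
                                   const std::vector<float> &input) {
  const int stack_size = static_cast<int>(stack.size());
  assert(stack_size > 0);
  std::vector<std::vector<float>> results(stack_size);
  // Each iteration writes only to its own slot, so the loop can safely be
  // distributed over threads. Without OpenMP it simply runs serially.
#ifdef _OPENMP
#  pragma omp parallel for num_threads(stack_size)
#endif
  for (int i = 0; i < stack_size; ++i) {
    results[i] = stack[i](input);
  }
  // Pack all the results serially into one output vector.
  std::size_t total = 0;
  for (const auto &r : results) {
    total += r.size();
  }
  std::vector<float> output(total);
  int out_offset = 0;
  for (const auto &r : results) {
    out_offset = CopyPacking(r, out_offset, &output);
  }
  return output;
}
```

Keeping the packing step outside the parallel loop means the offsets never depend on the order in which threads finish. This appears to be why the listing packs "serially".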

◆ OutputShape()

| StaticShape tesseract::Parallel::OutputShape (const StaticShape &input_shape) const | override virtual |

Reimplemented from tesseract::Network.

Definition at line 40 of file parallel.cpp.

◆ spec()

| std::string tesseract::Parallel::spec () const | inline override virtual |

Reimplemented from tesseract::Network.

Definition at line 36 of file parallel.h.

36 {

37 std::string spec;

39 // We have 4 LSTMs operating in parallel here, so the size of each is

40 // the number of outputs/4.

43 // We have 2 LSTMs operating in parallel here, so the size of each is

44 // the number of outputs/2.

47 } else {

49 }

50 } else {

53 } else {

55 spec += it->spec();

56 }

57 }

59 }

61 }
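The comments in the listing above say that when four (or two) LSTMs operate in parallel, the size of each is the number of outputs divided by 4 (or 2), and that otherwise the child specs are concatenated. The following is a minimal sketch of that arithmetic only. The `Layout` enum, the `SketchSpec` function, and the `quad:`, `pair:` and parenthesis markers are all hypothetical placeholders and do not reproduce Tesseract's actual spec syntax or its `NetworkType` checks.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical layout tags standing in for the type checks that are elided
// from the listing.
enum class Layout { kLstmQuad2D, kLstmPair1D, kGeneric };

// Builds a spec-like string. The markers are illustrative placeholders only.
std::string SketchSpec(Layout layout, int num_outputs,
                       const std::vector<std::string> &child_specs) {
  switch (layout) {
    case Layout::kLstmQuad2D:
      // Four LSTMs in parallel: each has num_outputs / 4 outputs.
      return "quad:" + std::to_string(num_outputs / 4);
    case Layout::kLstmPair1D:
      // Two LSTMs in parallel: each has num_outputs / 2 outputs.
      return "pair:" + std::to_string(num_outputs / 2);
    case Layout::kGeneric:
      break;
  }
  // Generic case: concatenate the spec of every element in the stack.
  std::string spec = "(";
  for (const auto &child : child_specs) {
    spec += child;
  }
  spec += ")";
  return spec;
}
```

With 256 outputs, the quad case reports a per-LSTM size of 64 and the pair case reports 128. The generic case only joins whatever strings its children supply.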

The documentation for this class was generated from the following files:

- /media/home/debian/src/github/tesseract-ocr/tesseract/src/lstm/parallel.h

- /media/home/debian/src/github/tesseract-ocr/tesseract/src/lstm/parallel.cpp